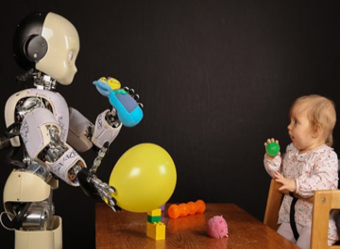

Interaction and communication between people and machines (e.g. robots, autonomous vehicles) benefit from a multimodal approach that combines verbal and non-verbal strategies. For example, non-verbal strategies such as pointing gestures or gaze direction complement linguistic instructions and support a better assessment of the other agent’s intention. Moreover, the autonomous system can itself interpret and predict people’s behavior and intentions by observing them. The use of non-verbal strategies can further support the building of a trust relationship between people and machines.

In this talk we will present a set of human-machine interaction experiments and robot learning architectures that investigate the dynamic combination of verbal and non-verbal strategies to support communication and trust in joint tasks between the user and the robot. A set of studies will also focus specifically on the issue of trust from two perspectives. In the first, we investigate how the behavior of the machine increases the person’s trust in the agent’s recommendations (Zanatto et al. 2016). In the second, we propose a cognitive architecture that the robot uses to create a “theory of mind” of various human users, and which allows it to modify and personalise its behavior (Patacchiola & Cangelosi 2016). These studies are based on the approach of developmental robotics (Cangelosi & Schlesinger 2015), which takes direct inspiration from human development theories and from the embodied and situated view of cognition (Pezzulo et al. 2013).